Hi there,

Welcome to the 386 new subscribers who have joined us since the last newsletter!

This issue is packed with the latest insights on GA4 and BigQuery. Let's dive into what's new and noteworthy.

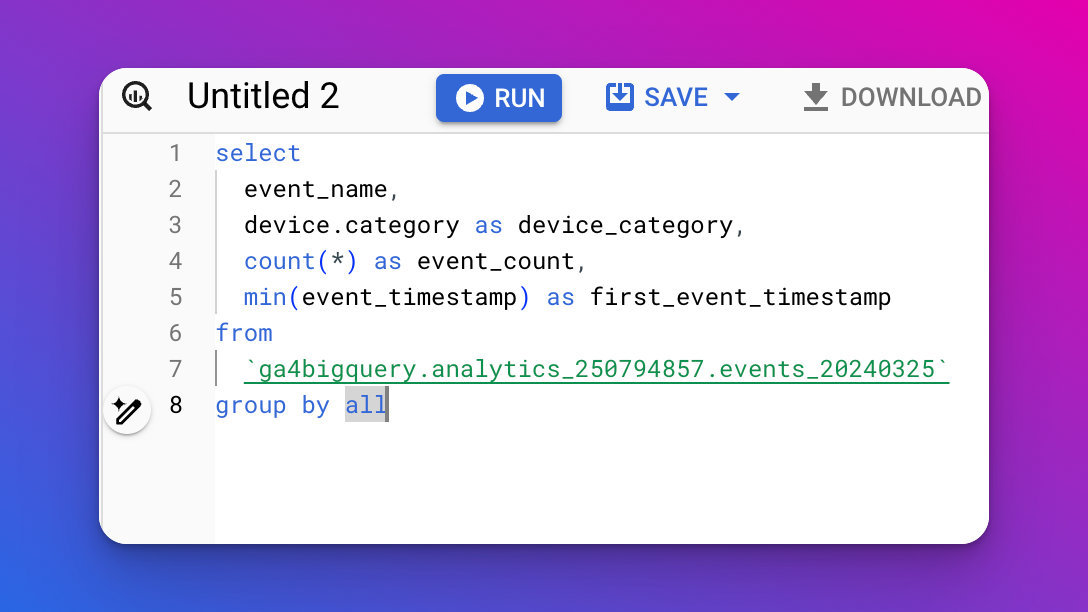

Exploring the new group by all clause

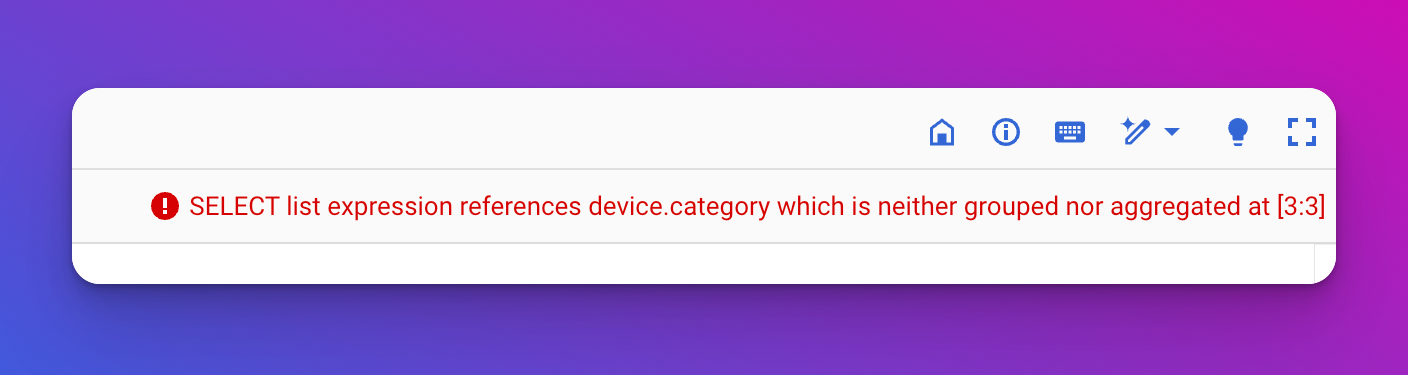

One of the most common (and annoying) errors encountered in the BigQuery UI when drafting SQL queries is likely the SELECT list expression references ... which is neither grouped nor aggregated at [...] This typically occurs when you are aggregating data but forget to group by one or more dimensions.

Fortunately, the BigQuery product team, taking cues from other databases such as Snowflake, has introduced the group by all clause. This feature fundamentally enables automatic grouping of rows by deducing grouping keys directly from the select items, thereby simplifying the query-writing process and enhancing its intuitiveness.

According to the documentation, the group by all clause excludes certain select items from grouping, such as expressions that include aggregate functions, window functions and constants. Moreover, if any remaining select item includes a volatile function (e.g. rand() or current_timestamp) or has a non-groupable type, an error is produced, ensuring that only compatible and relevant data is grouped.

Although using group by all is tempting for everyday tasks, you should exercise caution when implementing it in a production environment. As Axel Thevenot states:

In my opinion, GROUP BY ALL is a double-edged sword. It offers flexibility and so much peace while building our queries. But the risk of incorrect results is too important for me when it comes to production. In SQL for me, if you want it, you write it. It always will be preferable to specify what you want to avoid unintentional errors. This is why I also avoid the "GROUP BY 1, 2, 3" approach. (source)

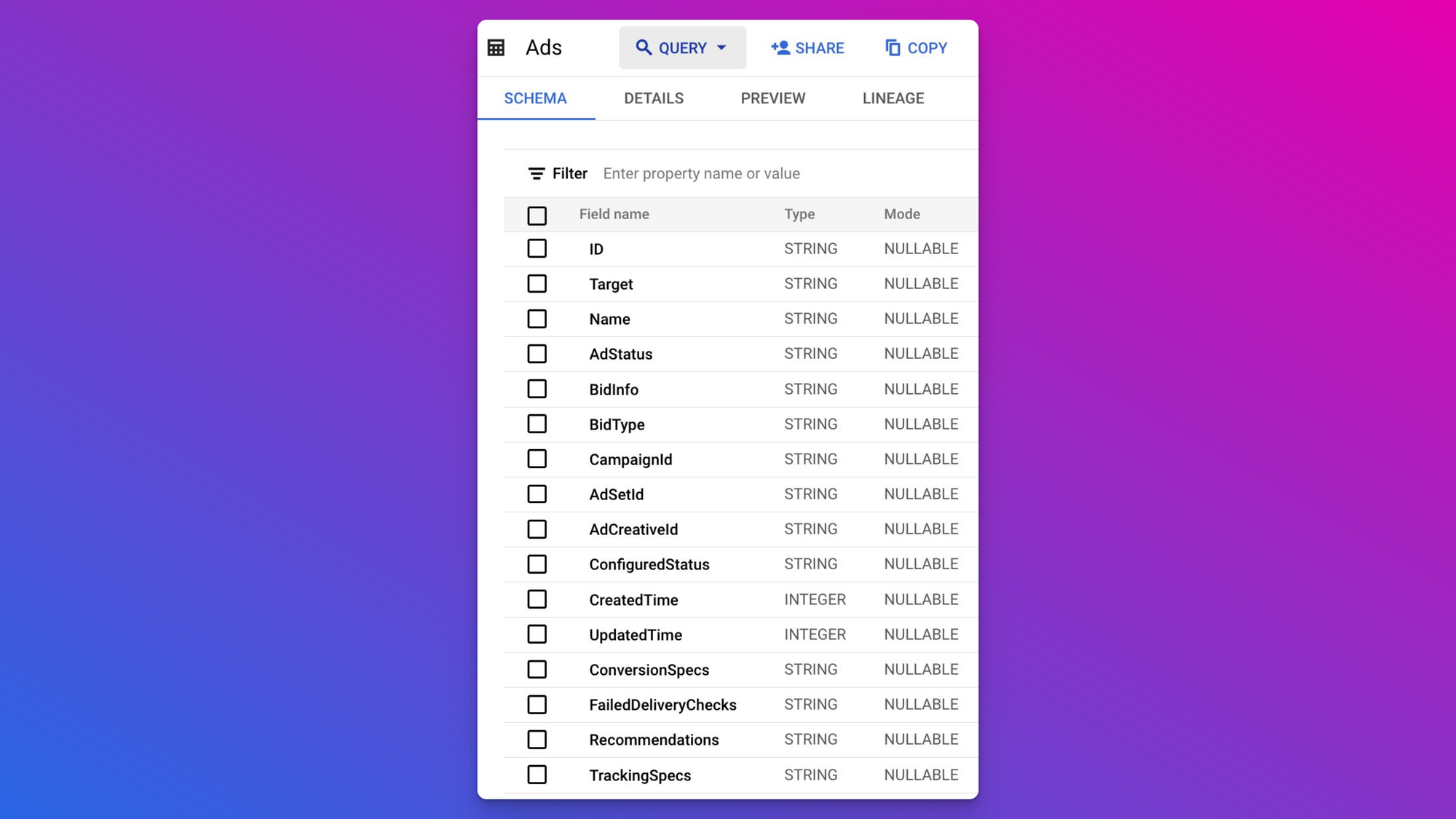

Facebook Ads & Salesforce (Marketing Cloud) added to BigQuery Data Transfer

The BigQuery Data Transfer Service is an in-built tool designed for transferring data from various sources such as Google Ads, YouTube, Google Merchant Center, and cloud storages like Amazon S3 and Azure Blob Storage into BigQuery.

Recently, the capability to perform data transfers from Facebook Ads, Salesforce (Marketing Cloud), Oracle and ServiceNow has been added, broadening the scope of data integration possibilities within BigQuery.

By leveraging these transfer services, organizations can automate the ingestion of critical marketing data into BigQuery, without the need of third-party solutions. While these features are in preview, they offer a glimpse into the future potential for seamless data integration within the Google Cloud ecosystem.

Automatic enablement of BigQuery Studio APIs

Starting in March 2024, Google Cloud projects with BigQuery will have APIs supporting BigQuery Studio capabilities automatically activated. These include Analytics Hub API and Dataform API.

Most users do not have to take action on this, unless you have automation scripts dependent on the status of these APIs.

Be assured: the automatic activation will not result in extra charges. See documentation.

Other BigQuery updates

- The ability to perform anomaly detection with BigQuery ML is now in preview. This feature enables you to detect anomalies in historical time series data or in new data with multiple feature columns.

- You can now undelete a dataset that is within your time travel window (default: the last 7 days) to recover it to the state that it was in when it was deleted.

- The minimum duration between scheduled queries has been reduced from 15 minutes to 5 minutes.

Relevant blog posts and resources from the community

- GA4 BigQuery SQL: How to Build Queries from Scratch

- Mapping GA4 conversions in BigQuery for comprehensive dashboarding

- Free course: Data modeling for GA4 BigQuery export with Activity Schema

- LLMs shouldn’t write SQL

- Technical Marketing Handbook (including a chapter about SQL & GA4)

- BigQuery View Generator (if you are using a JSON field with nested JSON objects, this tool generates a view for you).

- Efficient BigQuery Data Modeling: A Storage and Compute Comparison

- Finding the Most Valued Organic Keywords: A Data Story with GSC, GA4, and BigQuery

- PII vs on-site search data: Protect Against Data Loss and Empower Analytics with Google Cloud

- Mobile App Ecosystem: Introduction to App Stores, Firebase SDK, and Analytics (Firebase BigQuery export)

- How to build an Anomaly Detector using BigQuery

- Cute Dashboards, Big Bills: Exploring Looker Studio Costs with BigQuery Data Sources

- Document GA4 Events, Parameters & Annotations faster & easier

- Backfill-GA4-to-BigQuery (Github repo, not tested)

That's it for now. Thanks for reading and happy querying!

Best regards,

Johan