Hi there,

Ever since we reinvigorated the GA4BigQuery platform, I've been wanting to reinstate the regular newsletter as well. While articles have been regularly published on the website, and some (not all) of them have also been sent to members via e-mail, there is such a vast amount of news out there, and so many useful posts by members of the digital analytics community, that collating those into one place looked like a useful thing to do in its own right. So, more than a year after newsletter #13, we're back in action to keep an eye on the latest & greatest in BigQuery and digital analytics.

Note: Don't worry, I am not planning to start all future newsletters with a semi-emotional monologue like the above. Also note that there have been so many new features and changes since last year that future editions, besides covering the latest news and posts, will also have to "work backwards" and cover information that may no longer be so recent but is still worth capturing. The current edition only covers news from July 2025, and probably only partially at that, despite all my efforts.

Updates on the GA4BigQuery platform

It has been a while since I last sent out an article by email, but that doesn't mean we've been sitting idle. A good few new articles and shorter posts have been published on the website. When it comes to sending emails, though, I am trying to strike a balance between "pushing" useful and valuable material into your inboxes and spamming you with too much content. If you have any feedback on that (too much? too little?), please do let me know.

Here is a list of articles published "quietly" in July:

We've also created more categories and started recategorizing some articles to make navigation easier and relevant articles quicker to find. There are now new categories for ingesting data from sources other than GA4, analyzing Google Ads, engineering (cost management for now, but some Dataform content is coming soon too) and some "plain" but useful SQL tips & tricks that can come in handy in BigQuery as well. You can check out the updated categories in the left-side menu on the website. (Go to the home page or any article page to see it; it's not visible on the newsletter pages.)

BigQuery News

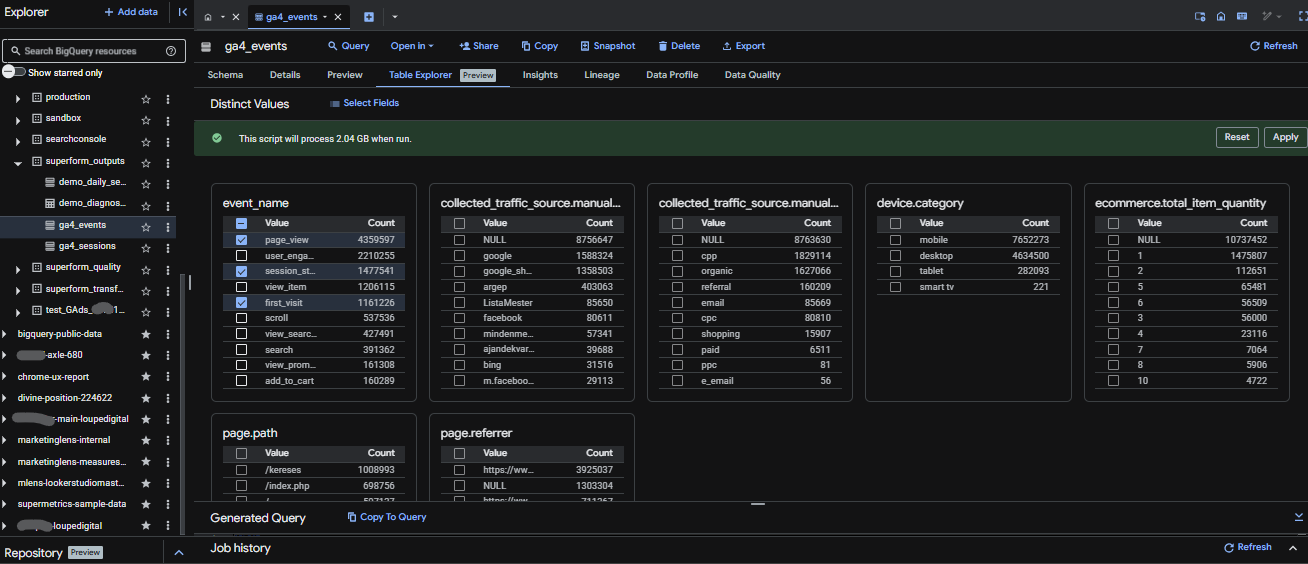

Table Explorer in BigQuery

A new feature called Table Explorer was added, which makes it a lot faster to understand the nature of the data in a table by running multiple frequency analyses: you just click on the fields you're interested in, and the UI does the rest for you. There is a LinkedIn post by Katie Kaczmarek which goes into slightly more detail.
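If "frequency analysis" sounds abstract: it simply means counting how often each distinct value of a field occurs. Here is a minimal sketch in plain Python of that idea. The sample rows and the `frequency` helper are made up for illustration, and this is not how Table Explorer is implemented under the hood.

```python
from collections import Counter

# Hypothetical sample rows, loosely shaped like GA4 export events.
rows = [
    {"event_name": "page_view", "platform": "WEB"},
    {"event_name": "page_view", "platform": "WEB"},
    {"event_name": "purchase", "platform": "IOS"},
    {"event_name": "scroll", "platform": "WEB"},
]


def frequency(rows, field):
    """Return (value, count) pairs for one field, most frequent first."""
    return Counter(row[field] for row in rows).most_common()


event_freq = frequency(rows, "event_name")
platform_freq = frequency(rows, "platform")

for value, count in event_freq:
    print(f"{value}: {count}")
```

Doing this for several fields at once means scanning every selected column, which is why the cost of such analyses is worth keeping an eye on for large tables.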

Mid-August update: Table Explorer now uses a partitioning filter, selecting only the last available partition by default. The risk of racking up costs by doing frequency analyses is much lower now. The above recommendations of course still apply.

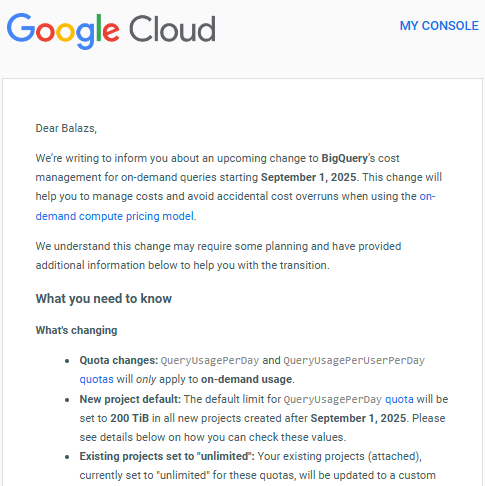

BigQuery Usage Quota limits will be automatically applied

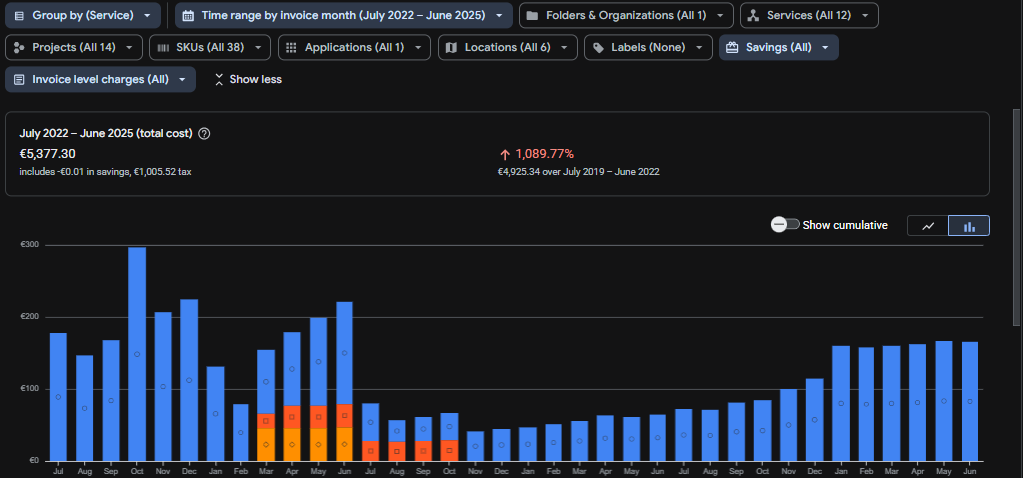

You might have seen the email from Google Cloud yourself. Starting from 1st September, new BigQuery projects will have a default usage quota of 200TB per day, and existing projects with no query limits will receive a quota based on previous usage activity.
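To put the default quota into perspective, here is a rough back-of-the-envelope calculation. Two things in it are my assumptions and not part of Google's announcement: the on-demand price per TiB (I use the US list price as I know it, so please check the pricing page for your region) and reading the 200 in the quota as TiB. The function names are hypothetical too.

```python
# ASSUMPTION: on-demand price in USD per TiB scanned; verify for your region.
ASSUMED_USD_PER_TIB = 6.25


def max_daily_cost(quota_tib, usd_per_tib=ASSUMED_USD_PER_TIB):
    """Worst-case on-demand spend per day if the whole quota is used up."""
    return quota_tib * usd_per_tib


def quota_for_budget(daily_budget_usd, usd_per_tib=ASSUMED_USD_PER_TIB):
    """Daily quota (in TiB) that keeps on-demand spend within a budget."""
    return daily_budget_usd / usd_per_tib


default_cap = max_daily_cost(200)     # the default quota for new projects
small_quota = quota_for_budget(50)    # e.g. a 50 USD per day budget

print(f"Default quota, worst case: {default_cap:.2f} USD per day")
print(f"Quota for a 50 USD daily budget: {small_quota:.1f} TiB")
```

Under these assumptions the default quota still allows a four-figure bill per day, so for most GA4 exports a much smaller custom quota is the more meaningful safety net.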

While the automatic quotas will reduce the amount of "gore" in the BigQuery cost horror stories (like this one and this one), the biggest value here is highlighting the option of enforcing usage limits in the first place. In case you missed it, the walkthrough for setting up your own quota limits can be found below (there is also some good discussion on LinkedIn here and here):

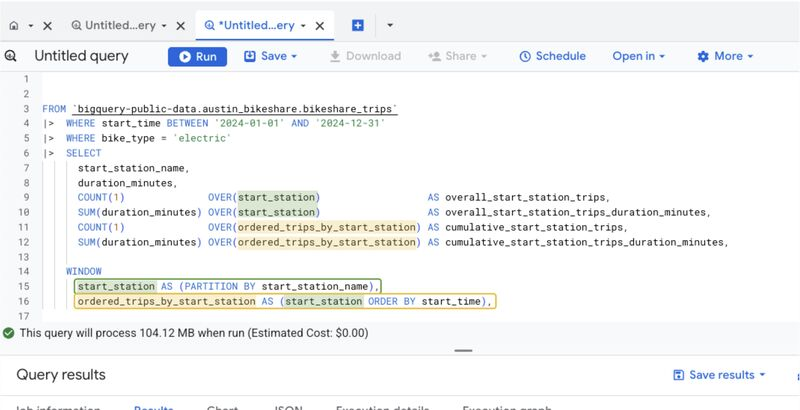

BigQuery Pipe syntax extended with new functionality

A new "revolution" is underway that reimagines how SQL is written, and it is now widely available in BigQuery. The pipe syntax takes some getting used to: here is a guide by Axel Thevenot to get the hang of it and have some best practices in your pocket. The pipe syntax now also allows the use of "named windows" and WITH clauses, which makes analysis even easier and the syntax more concise. Some people in the digital analytics community have reported noticeable performance improvements by going this route. Screenshot grabbed from Axel's post.
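To give a flavour of how a pipe query reads, here is a minimal sketch that assembles one as a string in Python. The query text is the point: it starts with FROM and each following line applies one step to the result of the previous one. The table name and the `pipe_query` helper are hypothetical, and I only use basic operators here (WHERE, AGGREGATE, ORDER BY, LIMIT), not the newer named windows or WITH support.

```python
def pipe_query(source, *stages):
    """Build a pipe-syntax query: FROM first, then one '|>' line per stage."""
    lines = [f"FROM {source}"] + [f"|> {stage}" for stage in stages]
    return "\n".join(lines)


query = pipe_query(
    "`my_project.analytics_123456.events_20250701`",  # hypothetical table
    "WHERE platform = 'WEB'",
    "AGGREGATE COUNT(*) AS events GROUP BY event_name",
    "ORDER BY events DESC",
    "LIMIT 10",
)

print(query)
```

The printed text can be pasted into the BigQuery console (after swapping in a real table). Reading it top to bottom mirrors the order in which the steps are applied, which is the main appeal compared to the classic SELECT ... FROM ... WHERE ... GROUP BY layout.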

New SQL feature: MATCH_RECOGNIZE (and more)

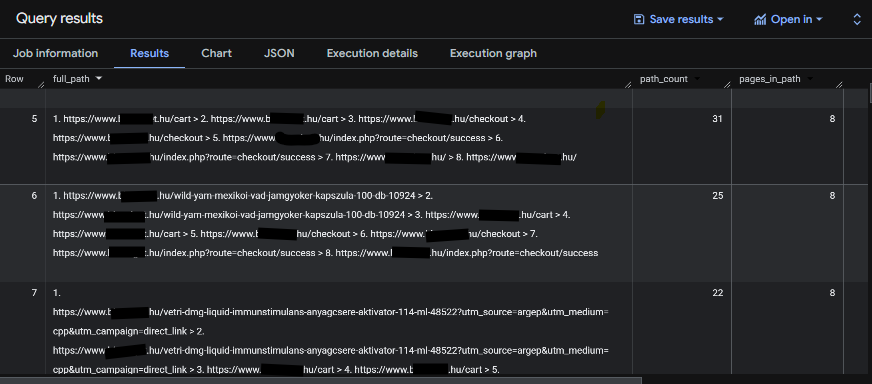

A new type of clause was added to BigQuery that can be used to retrieve (and optionally aggregate) a subset of rows retrieved from a table, based on finding a pattern of sequential rows. I guess conceptually, in the sense of subsetting rows, it's a "relative" of the WHERE, HAVING and QUALIFY clauses, and it is also related to window functions in the way we have to PARTITION and ORDER BY to generate the "framework" in which the patterns in question are sought.
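To make the idea of "a pattern of sequential rows" a bit more tangible, here is a conceptual sketch in plain Python (deliberately not BigQuery syntax). It partitions some made-up event rows by user, orders them, encodes each row as a one-letter symbol, and then searches for the pattern "one or more add_to_cart events followed by a purchase" with a regular expression. The rows, the symbol mapping and the `find_matches` helper are all hypothetical and only illustrate the concept; the real clause has its own syntax and options.

```python
import re
from collections import defaultdict

# Hypothetical rows: (user_id, event_order, event_name).
ROWS = [
    ("u1", 1, "view_item"),
    ("u1", 2, "add_to_cart"),
    ("u1", 3, "add_to_cart"),
    ("u1", 4, "purchase"),
    ("u2", 1, "view_item"),
    ("u2", 2, "purchase"),
    ("u3", 2, "purchase"),
    ("u3", 1, "add_to_cart"),
]

# One symbol per row condition, so that a row sequence becomes a string.
SYMBOLS = {"view_item": "V", "add_to_cart": "A", "purchase": "P"}


def find_matches(rows, pattern):
    """Return, per partition, the runs of consecutive rows matching the pattern."""
    partitions = defaultdict(list)
    for user_id, order, event in rows:          # the "PARTITION BY user" step
        partitions[user_id].append((order, event))

    matches = {}
    for user_id, events in partitions.items():
        events.sort()                           # the "ORDER BY event_order" step
        encoded = "".join(SYMBOLS.get(event, "x") for _, event in events)
        found = [
            [events[i][1] for i in range(m.start(), m.end())]
            for m in re.finditer(pattern, encoded)
        ]
        if found:
            matches[user_id] = found
    return matches


result = find_matches(ROWS, r"A+P")
print(result)
```

Note how u2 drops out entirely because its purchase was not preceded by an add_to_cart: the rows are subset based on the sequence they form, not on any single row's values.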

I haven't had the chance to play with this myself yet, so right now Luka Cempre's LinkedIn article is probably the best guide (that I know of currently; feel free to refer others in the comment section). Jobair Mahmud also reported on other new features around the same time, e.g. VECTOR_INDEX.STATISTICS (for detecting data drift in indexed tables), ALTER VECTOR INDEX REBUILD (for rebuilding vector indexes), new LOAD DATA options and others (besides mentioning the MATCH_RECOGNIZE clause discussed above). I haven't tried these either and would be very happy to receive tips on where they can come in useful.

AI.GENERATE_<> functions

New functions have been released to leverage the power of LLMs (Gemini) in various ways, e.g. to process a series of prompts at scale (AI.GENERATE), fill a table of pre-defined structure with LLM results (AI.GENERATE_TABLE), perform binary classification using an LLM (AI.GENERATE_BOOL), or even generate numeric outputs (AI.GENERATE_INT and AI.GENERATE_DOUBLE). For more detail, check out Katie Kaczmarek's LinkedIn post.

There are more AI functions one can try, such as AI.FORECAST(), which can be used for extrapolating trends without training your own model. Katie explains its use on their company blog, using GA4 data no less, so it's worth a read if you want to broaden your horizons.

Do note that some of this functionality is also available in BigFunctions by Unytics, a collection of custom user-defined functions already deployed on BigQuery (so you can just call those functions without having to install or set up anything). Positively mind-blowing stuff.

MCP for BigQuery: enabling conversations powered by your data

Of course, no day or week goes by these days without a significant development in the AI space, but this one is actually interesting and relevant: Google made an MCP available to connect LLMs to BigQuery. This opens up a great many opportunities, but I haven't tried anything on this front yet, so I can't go any deeper here.

What is an MCP, you might ask? It's an acronym for "Model Context Protocol", but instead of me trying to scramble together an explanation, please see Katie's post explaining MCPs in more detail. Ali Ahmed (another prolific content & solution creator) reported that an MCP has been created for Google Analytics too, so that you can directly talk to your GA4 data! Some people have tried it and have "mixed" experiences, but one definitely has to watch this space as it improves.

Recent content about GA4 and BigQuery

Ours is a vibrant community so let's have a look at some of the posts that have come my way on this topic.

- A video about attribution modelling by Kisholoy Mukhopadhyay, using GA4 data in BigQuery and Python (via Google Colab).

- One of the ways Looker Studio itself can be responsible for a crazy amount of cloud costs, and how to prevent that from happening (this is not GA4 analysis per se, but can happen with monstrously big GA4 accounts). By Siavash Kanani, who brought us Looker Studio Masterclass.

- How discrepancies can occur between collected_traffic_source and session_traffic_source_last_click, by one of the other loud voices on attribution, Alex Ignatenko.

- How to check the impact of Consent Mode on your tracking using the GA4 export in BigQuery, by Július Selnekovič. With code. Checking the consent rate can be really useful in Consent Mode vs tag firing timing issues.

Other digital analytics updates by the community

Now that we've covered a lot about BigQuery, there is probably merit in broadening the scope and checking out a few other digital analytics updates which, though not directly (or even indirectly) related to BigQuery, are worth being aware of for practitioners in this field.

- Editing annotations in Google Analytics

- An "anecdote" on instilling action from data

- The best movie-inspired memes in digital analytics (def read this one if you need a good laugh!)

- Standard lead reports added to GA4 (based on the new GA4 lead events)

- Update on GA4Dataform: subscribe to get the release date and pricing when it's revealed (direct subscribe link here)

- Practical Ways to Create Value from Data

- Chrome extension to filter internal traffic

- New version of Watson released: v7 Pro (if you don't know what Watson is, do check it out, even the free tool is very useful)

- Google Trends API alpha release

- Why your Measurement Protocol events might be missing: an important gotcha, worth keeping an eye on!

Closing remarks

Now, that was quite a lot—the community has been very active, and the amount of knowledge being shared is nothing short of amazing. As mentioned above, feel free to add a comment below if you think any useful updates were missing (even if those updates / posts / articles were written by you; no shame in broadcasting one's own content, if it might prove useful or save time for some people).

August has already been very vibrant too; looking forward to a long night of writing it all up 😄

Also keep in mind that MeasureSummit 2025 is around the corner. It's worth grabbing the Early Bird tickets, which allow you to (re-)watch sessions after the event ends; there are always so many useful ones that it's almost impossible to watch them all in 3 days. Superweek tickets are out too if you're in for an immersive analytics conference experience in early 2026.

Enjoy your summer (northern hemisphere folks; hope winter is not too bad for our southern hemisphere subscribers either), and all the best until next time,

Best regards,

Balazs