Advanced ways to operate the Google Ads Data Transfer

This article is a "sequel" (pun very much intended) to the first Google Ads Data Transfer configuration tutorial, diving into some deeper settings and know-how for advanced use cases.

The Google Ads Data Transfer is a very handy feature, and is probably the most straightforward way to import Google Ads data into BigQuery. Google Ads data is also probably one of the most important data sources beside GA4, since a lot of the marketing budget is spent here, and companies rightfully want to stay on top of its performance.

Another, earlier tutorial deals with the setup mechanism. It was updated in late June 2025 with some "recent" changes and additional information. If you are just getting started, please read this first:

The present article dives deeper into the settings and provides some more context and tips. This guide focuses on the transfer setup only; more articles will be coming to cover the structure of the Google Ads transfer "schema" (spoiler alert: it's not actually a "schema") and show various ways it can be used to extract valuable information from the depths of Google Ads' data.

In this guide, you'll read the following:

- A way to get the Google Ads backfill finish quicker when a larger period of time needs to be backfilled (e.g. 6-8 months)

- A way to avoid creating dozens of datasets, when you have many Google Ads accounts.

- Some considerations around naming conventions of Google Ads dataset and transfer names.

- How to limit the number of tables if you don't need all 109 of them.

- A faster way to launch backfills using the Cloud Shell terminal (with code).

- The expected cost of using the Google Ads Data Transfer. (With code to check the size of your own datasets.)

- Why you should enable notification emails on the transfer and act on them the same day. (And also why you should not wait with setting up the transfer—you should set it up right away to avoid losing data, like with GA4 and GSC.)

- Using GAQL and the "Custom" reporting option to pull information that is not available from the standard transfer schema.

Let's get started.

Reducing the time it takes to backfill Google Ads data

Usually the first thing after setting up a Google Ads transfer configuration is to launch a backfill as far back as possible, e.g. 2 years. While Google Ads will let you pull data from the API (and thus pull data using the data transfer) as far back as you want with no limits, it has two limitations:

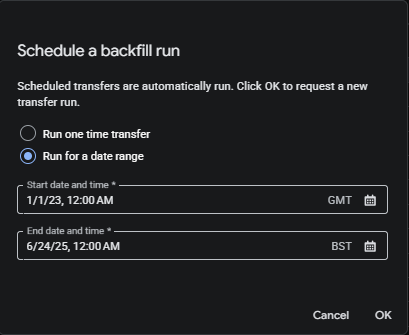

(1) There is a limited number of backfill jobs that can be queued at a time. If you specify too long an interval to backfill (e.g. a whole year), then it will run into an error. Let's see an example of trying to backfill two years:

The error message is somewhat unclear and perhaps even a little weird (I haven't figured out what that "303" is when the actual number of days / load jobs between the specified dates is 906); this is normal, all it means you need to specify a shorter period, e.g. 6-8 months at a time. It is also worth considering that backfilling 8 months' worth of data means that ~240 days need to be loaded, which takes us to the second limitation:

(2) transfer jobs in a sequence are scheduled 35 minutes apart from each other. This means that an 8 month backfill will take a bit less than 6 days to complete.

This may not be a problem as it'll work away in the background, but if you need it sooner ("I need the report by yesterday"), there is a way to trick the system: instead of setting up the whole period in one go, you can split it up in chunks and schedule multiple smaller intervals. E.g. you can run the "schedule backfill" 4 times, setting up 2 months in each one, and scheduling them to be 8 minutes apart from each other. (Running them concurrently should be fine too, but as a precaution it's good to give a few minutes to each run.) This way, the whole 8 months will be loaded in 35 hours i.e. a day and a half.

What to do when you operate multiple Google Ads accounts

In such a case, usually these accounts – given that they belong to the same organization – are arranged under an MCC account. If it is possible, get access to and ask for the account ID ("customer ID") of the MCC account, and run the transfer on that instead of the individual accounts.

If it is not possible, then you'll have to set up multiple transfers. It's not the end of the world, but will increase the amount of legwork involved in dealing with the data.

How to name datasets and transfer jobs

This is a subjective topic of course. And almost doesn't matter at all when you have a single Google Ads account. But if you:

- load data from multiple Google Ads accounts into the same BigQuery project

- use multiple third party connectors

- load data from multiple sources

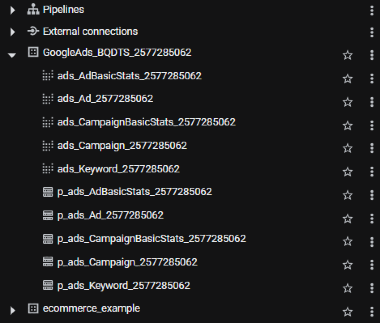

then the naming convention might matter to keep things organized. one method that works well is to build the name from the following elements: the platform (data source), the import mechanism ("tool") and the actual account the data is being pulled from. E.g. something like these:

GoogleAds_BQDataTransfer_1234567890GoogleAds_Supermetrics_1234567890

If you load data from many Google Ads accounts, then including the descriptive name of the account into the dataset/transfer might also help, e.g. when you're told to backfill a particular one, and they specify the account by its name, then it'll be easier to find the right transfer if the account name is included in the transfer name too. Otherwise you'll first have to look up the ID, which can be kinda irritating.

Also, as implicitly alluded to in the above, it makes it easy to maintain the logical connection between the data transfer and the resulting dataset if they are named exactly the same.

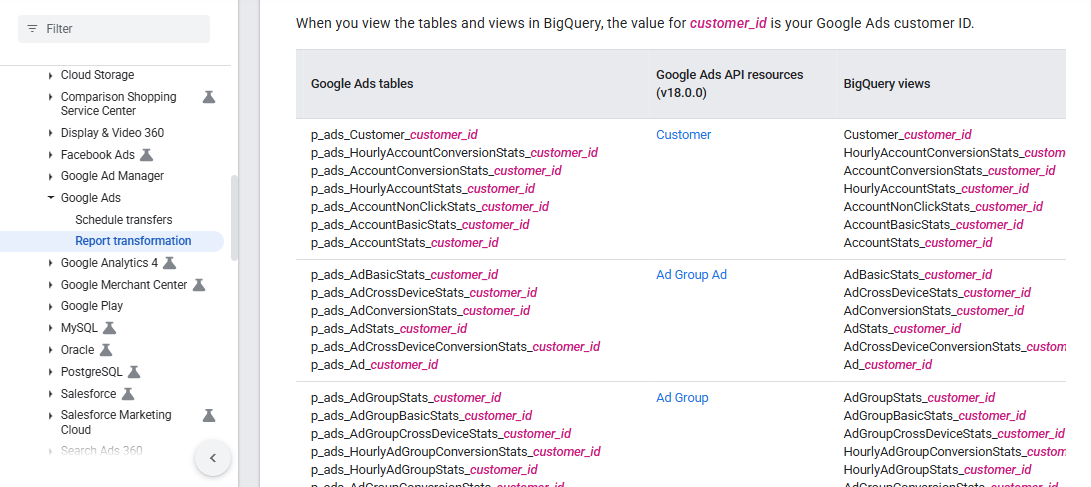

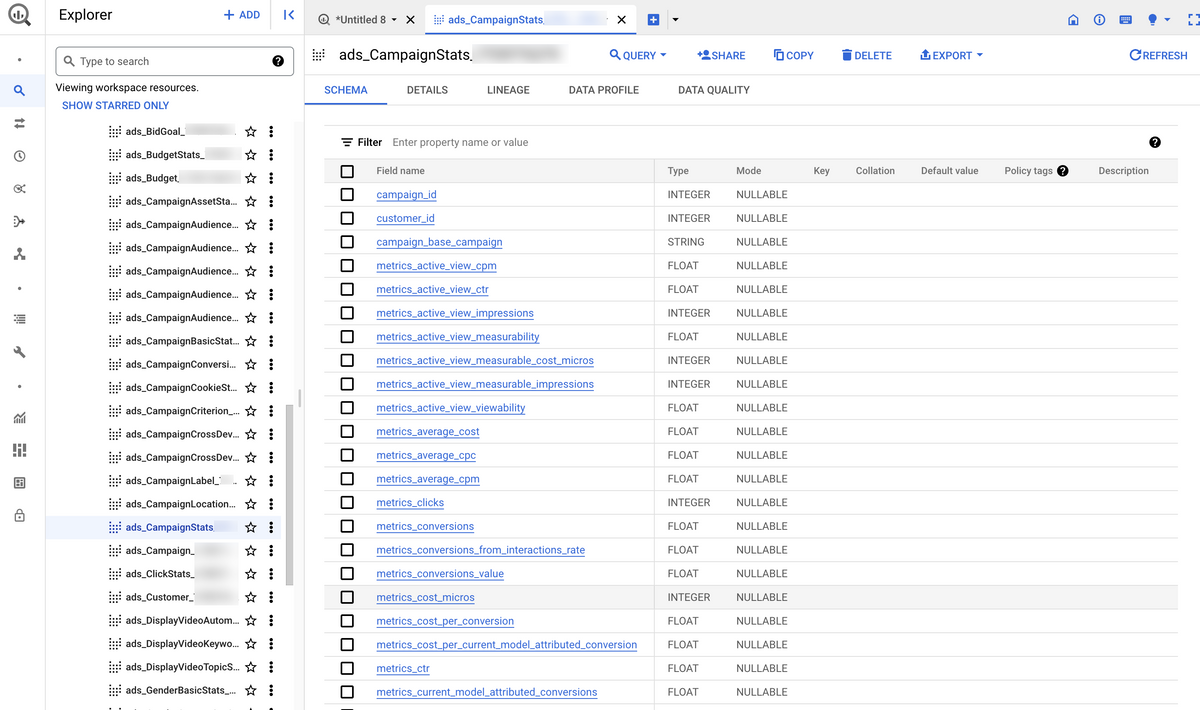

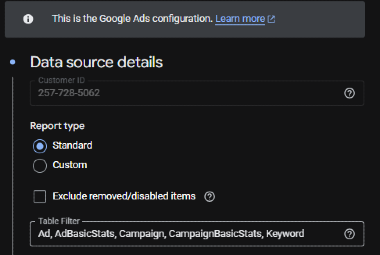

"There are too many tables and I don't need all of them"

There is a way to limit the tables you want to load with the transfer. While I can sympathize with the feeling of getting overwhelmed by the endless list of tables when you first open the Google Ads dataset, I'd start with a generic advice to not exclude any tables. Google Ads data is small in size, it's quite well thought out, and has everything you might ever need. But the option to limit it is there for niche use cases.

ClickStats table, because it's comparatively large... but it's rather useful in return, so again, I don't recommend dropping it upfront. By launching this transfer we get a much narrower list of tables:

Scheduling backfills using the Cloud Shell & an important use case where it is relevant

Even if you're not so much into scripting in general, this method can come handy sometimes. For example, for longer sales cycles (where a long time elapses between seeing the ad and the purchase), the default 7-day (or even up to 30 day) refresh window is not enough to keep the data up to date.

E.g. the number of conversions on 1st Jan 2025 might be 50 when you check it on 10th January, but might be 100 when you check it at the end of the month. (This is an illustrative theoretical example, but it can happen, especially for businesses with longer sales cycles.)

We can easily think of some business verticals where this applies:

- B2B companies - fewer, larger deals (often a process for offline conversions is also needed here to properly see them in Google Ads)

- B2C companies with expensive products that people think through before buying

- Mobile apps with freemium model (a good few weeks may pass until the customer decides to subscribe to the paid version)

- [...]

In such cases, it is important to run a backfill regularly, like every month or every couple of days. While it doesn't take long to set up a backfill manually and it's OK as a once-off, it can be irritating if it becomes a repetitive exercise. This is where running cloud shell scripts can help.

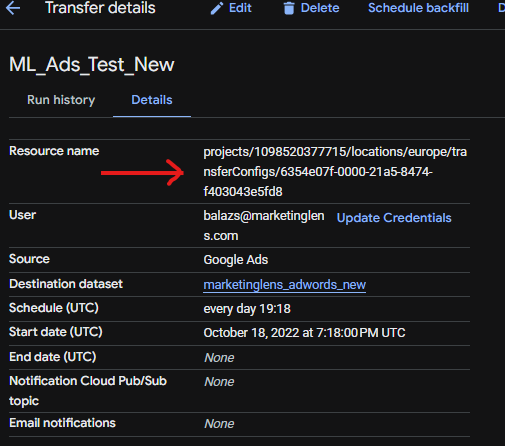

Before jumping to the code snippet, we'll need to retrieve the unique identifier for the transfer config:

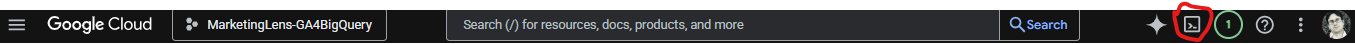

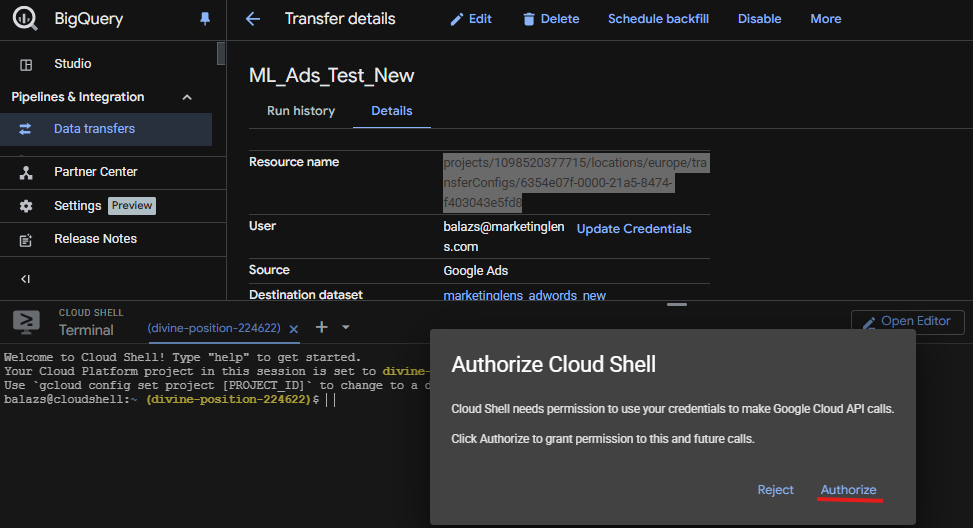

Now open the cloud shell in the top menu bar and authorize it:

And now this is the code snippet that needs to be run (change the dates and the resource ID and copy-paste):