How to flatten the GA4 BigQuery export schema for usage in relational databases

In this tutorial I will show you - step by step - how to flatten all fields of the Google Analytics 4 export schema with the purpose of using the data in a relational database outside of BigQuery.

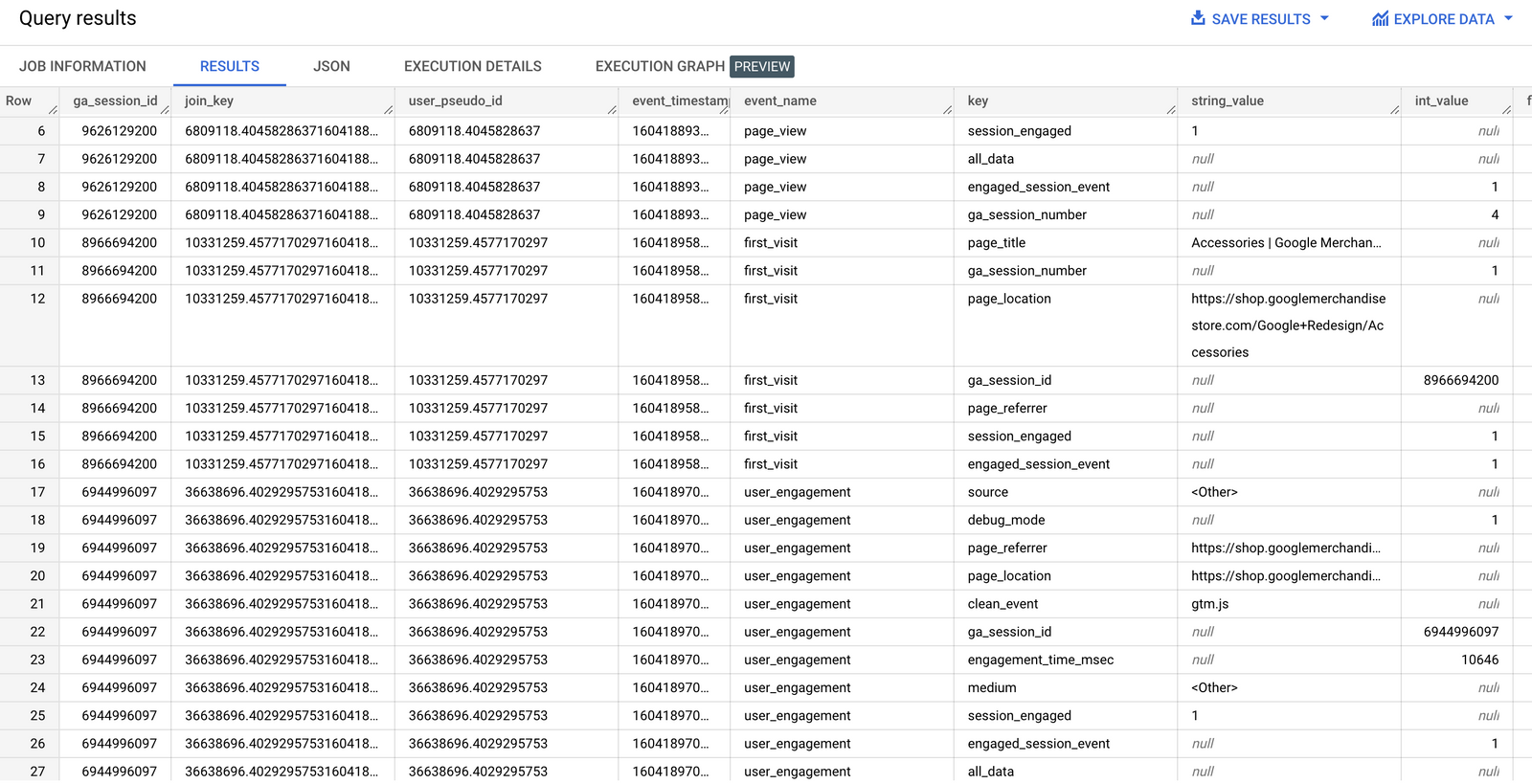

As you might have noticed the Google Analytics 4 export in BigQuery is containing nested and repeated fields. This is fine when you know how to query this (this website provides lots of example queries), but becomes an issue when you want to send the data to another (relational) database, like Redshift or Snowflake.

In this tutorial I will show you - step by step - how to flatten all fields of the Google Analytics 4 export schema with the purpose of using the data in a relational database outside of BigQuery.

I was inspired by this solution for flattening the Universal Analytics export schema.

For those who want to import GA data to a relational database: Please be aware that a nested schema is actually putting multiple relational tables into one nested structure - they are equivalent, so plain flattening is not the best solution here: you want to separate the tables again! That way you save a lot of space in storage and you queries might get faster.

The Google Analytics 4 schema is quite different, but we'll adapt this idea to make it work for us.

Explore schema

Let's take a look at the schema for a single recent export table:

select

column_name,

ordinal_position,

data_type

from

-- change this to your google analytics 4 export location in bigquery

`ga4bigquery.analytics_250794857`.INFORMATION_SCHEMA.COLUMNS

where

table_name = concat('events_',format_date('%Y%m%d',date_sub(current_date(), interval 3 day)))A nested field will have the data type STRUCT and a repeated field will show an ARRAY data type. Looking at the results of our query we can conclude that three columns contain repeated fields: event_params, user_properties and items.

| column_name | ordinal_position | data_type |

|---|---|---|

| event_date | 1 | STRING |

| event_timestamp | 2 | INT64 |

| event_name | 3 | STRING |

| event_params | 4 | ARRAY<STRUCT<key STRING, value STRUCT<string_value STRING, int_value INT64, float_value FLOAT64, double_value FLOAT64>>> |

| event_previous_timestamp | 5 | INT64 |

| event_value_in_usd | 6 | FLOAT64 |

| event_bundle_sequence_id | 7 | INT64 |

| event_server_timestamp_offset | 8 | INT64 |

| user_id | 9 | STRING |

| user_pseudo_id | 10 | STRING |

| privacy_info | 11 | STRUCT<analytics_storage STRING, ads_storage STRING, uses_transient_token STRING> |

| user_properties | 12 | ARRAY<STRUCT<key STRING, value STRUCT<string_value STRING, int_value INT64, float_value FLOAT64, double_value FLOAT64, set_timestamp_micros INT64>>> |

| user_first_touch_timestamp | 13 | INT64 |

| user_ltv | 14 | STRUCT<revenue FLOAT64, currency STRING> |

| device | 15 | STRUCT<category STRING, mobile_brand_name STRING, mobile_model_name STRING, mobile_marketing_name STRING, mobile_os_hardware_model STRING, operating_system STRING, operating_system_version STRING, vendor_id STRING, advertising_id STRING, language STRING, is_limited_ad_tracking STRING, time_zone_offset_seconds INT64, browser STRING, browser_version STRING, web_info STRUCT<browser STRING, browser_version STRING, hostname STRING>> |

| geo | 16 | STRUCT<continent STRING, country STRING, region STRING, city STRING, sub_continent STRING, metro STRING> |

| app_info | 17 | STRUCT<id STRING, version STRING, install_store STRING, firebase_app_id STRING, install_source STRING> |

| traffic_source | 18 | STRUCT<name STRING, medium STRING, source STRING> |

| stream_id | 19 | STRING |

| platform | 20 | STRING |

| event_dimensions | 21 | STRUCT |

| ecommerce | 22 | STRUCT<total_item_quantity INT64, purchase_revenue_in_usd FLOAT64, purchase_revenue FLOAT64, refund_value_in_usd FLOAT64, refund_value FLOAT64, shipping_value_in_usd FLOAT64, shipping_value FLOAT64, tax_value_in_usd FLOAT64, tax_value FLOAT64, unique_items INT64, transaction_id STRING> |

| items | 23 | ARRAY<STRUCT<item_id STRING, item_name STRING, item_brand STRING, item_variant STRING, item_category STRING, item_category2 STRING, item_category3 STRING, item_category4 STRING, item_category5 STRING, price_in_usd FLOAT64, price FLOAT64, quantity INT64, item_revenue_in_usd FLOAT64, item_revenue FLOAT64, item_refund_in_usd FLOAT64, item_refund FLOAT64, coupon STRING, affiliation STRING, location_id STRING, item_list_id STRING, item_list_name STRING, item_list_index STRING, promotion_id STRING, promotion_name STRING, creative_name STRING, creative_slot STRING>> |

| collected_traffic_source | 24 | STRUCT<manual_campaign_id STRING, manual_campaign_name STRING, manual_source STRING, manual_medium STRING, manual_term STRING, manual_content STRING, gclid STRING, dclid STRING, srsltid STRING> |

| is_active_user | 25 | BOOL |

Define our strategy

If we want to use the GA4 export schema in a relational database, we will need four tables:

- flat_events

- flat_event_params

- flat_user_properties

- flat_items

As GA4 is an event driven analytics tool, the events table is our base: it will contain all top level data about users, events, device, traffic source, ecommerce transactions and so on.