Backfilling Data Transfers using the Cloud Shell

This tutorial shows how you can accelerate Data Transfer backfills using a purpose-built Cloud Shell script, and thereby save a significant amount of time (Facebook) or reducing the time you need to wait for all the data to be loaded (Google Ads).

Time is money, as the lore says. Consequently, we data analysts and engineers (and marketers) don't like waiting, and we especially don't appreciate unnecessary manual work.

Unfortunately, there are aspects of the BigQuery Data Transfers that make us wait, or give us manual work. Here are two examples:

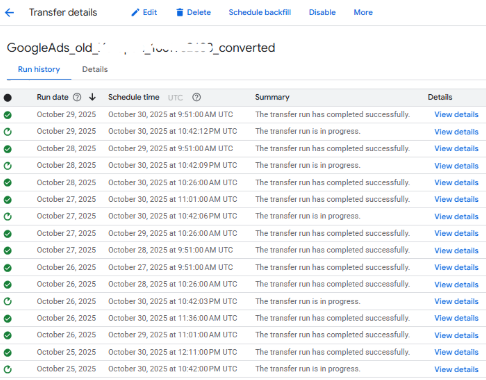

- When you backfill a Google Ads transfer, the sequential load jobs are scheduled 35 minutes apart from each other. While it's still "convenient enough" in the sense that you can set up 6-8 months' worth of transfer jobs in one go, but you need to wait a couple of days for all that data to load.

- One of the most notable shortcomings of the Facebook Ads Data Transfer is that you can only backfill 1 day at a time, and you cannot (even manually) put more backfill jobs into the queue. This means if you want to backfill 2 months' worth of Facebook data (which is not even that much), you'd need to manually trigger a load job every 5 minutes, ~60 times. Even if you do other things in the meantime, you will have to split your attention for (ideally) 5 hours.

Neither scenario "cuts it", but fortunately there is a hack: you can schedule transfer jobs using BigQuery's (or GCP's) Cloud Shell terminal (also called CLI, as in "Command Line Interface"). Let's see how this makes life easier.