How to set your usage quota in BigQuery to avoid excess cost

This article shows how you can limit query usage in BigQuery projects, to avoid unpleasant (or outright catastrophic) surprises on your GCP cloud bill.

This article was inspired by the recent news that Google will automatically apply usage quotas to new BigQuery projects, and also to existing ones where a usage quota has never been set (so the quota limit is currently "undefined"). Since the default limits will still be too high in my opinion, I thought it might be useful to show how you can set a more stringent quota for individual users and/or across the project. Also, why wait until September when you can take out all the risk in a few minutes, right away?

Why we need to bother with quotas

One of the first things you should do when you set up a BigQuery project is protect it against unexpected cloud bills. Cloud cost surprises can occur in two main ways (and, of course, anywhere in between these two extremes):

- You (or someone with access to the project) accidentally or purposefully queries an enormous dataset. For new BigQuery projects, this is very unlikely to happen, especially when working with digital marketing (including GA4 or other high volume, event level data). Some horror stories have been reported with enormous accidental bills; the way they managed to do this was by picking possibly the worst possible table in the

bigquery-public-dataproject for playing with queries (and doing aSELECT *query without any filters, assuming that theLIMITstatement limits the billed queries - which it doesn't).

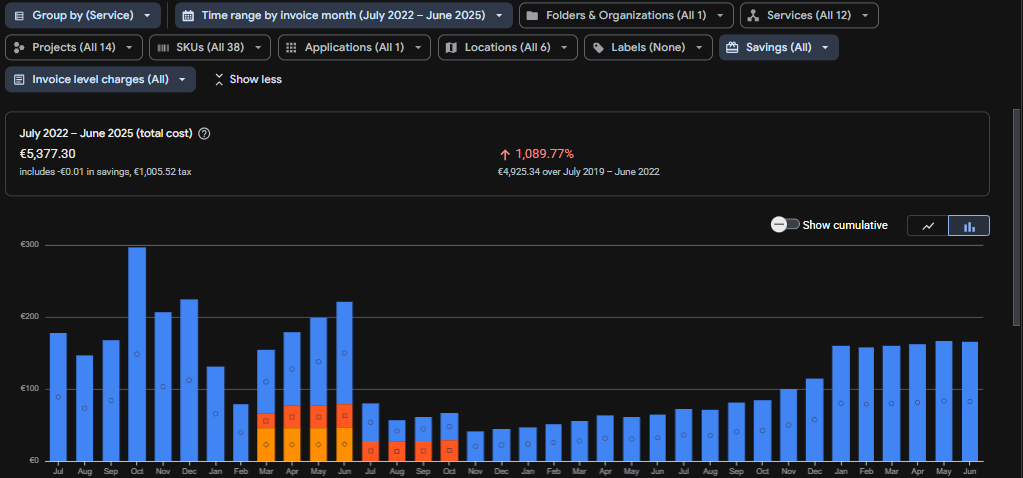

TABLESAMPLE SYSTEM (1 PERCENT) clause after the table name in the FROM clause. The LIMIT statement only limits the rows displayed in the output, not the bytes queried.- There is an old BigQuery project where GA4 data has been exported (from a large traffic property) for a long time, and data analysts start analyzing this data intensively. Perhaps a new dashboard is created and implemented with massive attribution or other processing logic in the data pipeline. GA4 data is not crazy big in terms of storage, but it can build up over time e.g. for websites where there are more than a million events every day. (This is GA360 territory which comes with additional cost, but now we're focusing on BigQuery only.) This doesn't usually cause a sudden spike, it's more of a gradual build-up, but the budget owner for the cloud bill might get a surprise when the bill first passes a few thousand $s.

Setting quota limits upfront protects against both scenarios, so it's really good practice to do this after setting up the project, or as soon as you become aware of this feature.
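If you want to see for yourself that `LIMIT` does not reduce what you pay for, a dry run is a handy check: it asks BigQuery how many bytes a query would process without actually running it. Below is a minimal sketch, assuming the `google-cloud-bigquery` Python package is installed and default credentials are configured. The helper names are my own, for illustration only.

```python
# Minimal sketch: compare the estimated scan size of a query with and without LIMIT.
# Assumes the google-cloud-bigquery package and application default credentials.

def bytes_to_tib(num_bytes: int) -> float:
    """Convert a byte count to tebibytes (1 TiB = 2**40 bytes)."""
    return num_bytes / 2**40


def dry_run_bytes(sql: str) -> int:
    """Return the number of bytes BigQuery estimates the query would process.

    A dry run validates the query and returns the estimate without executing it.
    """
    # Imported here so the pure helper above can be used without the package.
    from google.cloud import bigquery

    client = bigquery.Client()
    config = bigquery.QueryJobConfig(dry_run=True, use_query_cache=False)
    job = client.query(sql, job_config=config)
    return job.total_bytes_processed


def limit_comparison(table: str) -> dict:
    """Dry-run the same SELECT * with and without LIMIT; return TiB per query."""
    queries = (
        f"SELECT * FROM `{table}`",
        f"SELECT * FROM `{table}` LIMIT 10",
    )
    return {sql: bytes_to_tib(dry_run_bytes(sql)) for sql in queries}
```

Calling `limit_comparison("your-project.your_dataset.your_table")` (a placeholder table name) returns one estimate per query. On a table that is not clustered, I would expect the two figures to be identical, which is exactly the trap described above.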

Setting up the quota limits

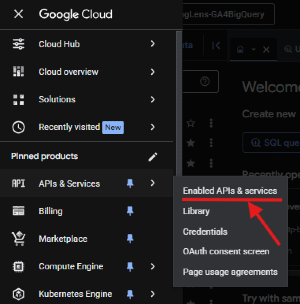

Here is how you set up the protective guardrails. First, click the hamburger menu on the top left and select "Enabled APIs & services" from "APIs & Services".

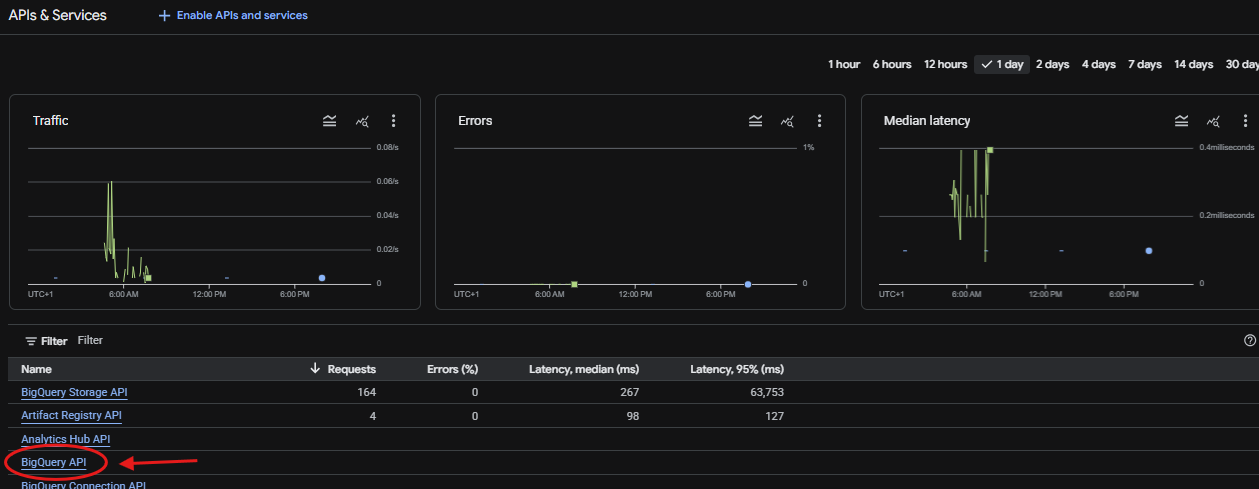

Select BigQuery API from the list.

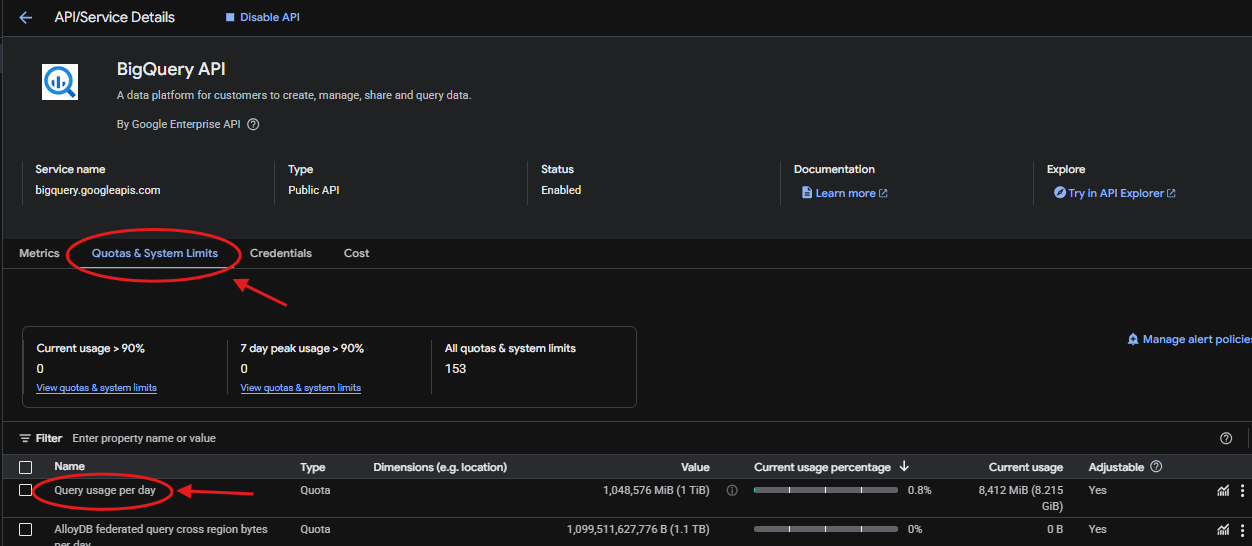

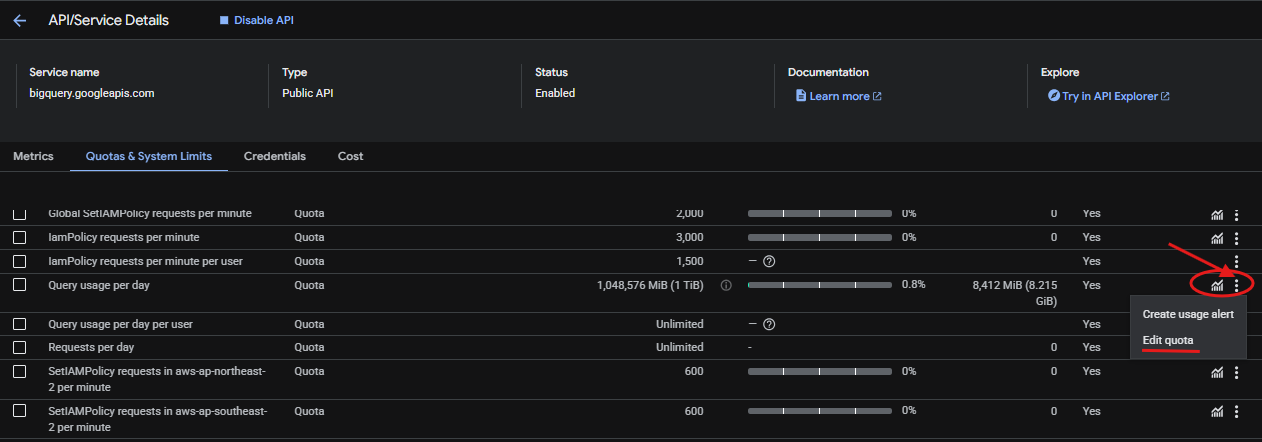

Now, go to "Quotas & System Limits" in the menu below the BigQuery API banner.

The list of quotas appears as a table at the bottom, with all sorts of things in there that can be limited. It might help to sort the table to find what we need. There are two things we're looking for:

- Query usage per day: the overall number of bytes that can be queried in the project over the span of a day.

- Query usage per day per user: the number of bytes that can be queried by each individual user in the project, in a day.
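To get a feel for how these two quotas interact, here is a small back-of-the-envelope sketch. It treats the project quota as a hard daily ceiling and the per-user quota as a ceiling per person, so the most the project can scan in a day is the smaller of the two totals. The function names are mine, and the price per TiB is a parameter you need to fill in from the current on-demand pricing for your region; the figure in the usage note is only an assumed example.

```python
from typing import Optional


def effective_daily_cap_tib(
    project_quota_tib: Optional[float],
    per_user_quota_tib: Optional[float],
    num_users: int,
) -> Optional[float]:
    """Largest volume (in TiB) the project can scan in one day.

    Pass None for a quota that has not been set. Returns None when
    neither quota is set, i.e. there is no cap at all.
    """
    caps = []
    if project_quota_tib is not None:
        caps.append(project_quota_tib)
    if per_user_quota_tib is not None:
        # Every user can spend their own allowance on the same day.
        caps.append(per_user_quota_tib * num_users)
    return min(caps) if caps else None


def worst_case_cost(cap_tib: float, price_per_tib: float, days: int = 1) -> float:
    """Upper bound on query cost if the cap is exhausted every day."""
    return cap_tib * price_per_tib * days
```

For example, with only a per-user quota of 1 TiB and five users, `effective_daily_cap_tib(None, 1, 5)` gives 5 TiB per day. At an assumed price of 6.25 per TiB, `worst_case_cost(5, 6.25, days=30)` comes to 937.5 for a month in which everyone hits their limit every single day.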

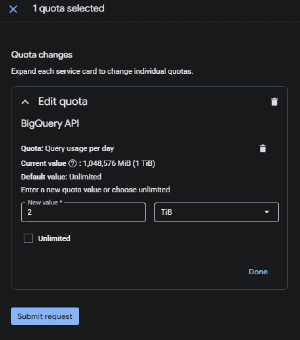

Set a limit on at least one of them by clicking the three-dot menu on the right and picking "Edit quota", then setting the new value in the pane that pops up on the right.

In this example, I have already set the quotas (a few months ago, when the aforementioned $10,000 bill story came out). If you haven't used this feature before, the current value will most likely be "Unlimited".

If it's a brand new project, I recommend setting a relatively low limit, e.g. 1 TB. If it's an established project, then of course you need to look at the usual level of activity and come up with a threshold based on actual usage. With a limit of 1 TB, someone will shout sooner or later, but chances are it'll be quite far in the future, and that risk is better than someone racking up a big bill by exploring the public datasets (or your own, if you upload massive data into BigQuery) and querying tables carelessly.
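For an established project, one way to ground the threshold in actual usage is to look at the daily bytes billed over the last few months and leave some headroom above the busiest day. The sketch below is only one possible heuristic, not an official recommendation: the "peak day times headroom" rule, the default headroom of 2 and the function name are my assumptions. The SQL string assumes your data lives in the US multi-region and that you are allowed to read the `INFORMATION_SCHEMA.JOBS` view.

```python
import math

# Query you could run in BigQuery to get billed bytes per day.
# Assumption: US multi-region; change `region-us` to match your location.
DAILY_USAGE_SQL = """
SELECT
  DATE(creation_time) AS usage_date,
  SUM(total_bytes_billed) AS bytes_billed
FROM `region-us`.INFORMATION_SCHEMA.JOBS
WHERE job_type = 'QUERY'
  AND creation_time >= TIMESTAMP_SUB(CURRENT_TIMESTAMP(), INTERVAL 90 DAY)
GROUP BY usage_date
ORDER BY usage_date
"""


def suggest_quota_tib(daily_bytes, headroom: float = 2.0, floor_tib: int = 1) -> int:
    """Suggest a daily quota in whole TiB from a list of daily billed bytes.

    Heuristic (an assumption, tune to taste): busiest day times `headroom`,
    rounded up to a whole TiB, and never below `floor_tib`.
    """
    if not daily_bytes:
        # No history yet: fall back to the low starting limit.
        return floor_tib
    peak_tib = max(daily_bytes) / 2**40
    return max(floor_tib, math.ceil(peak_tib * headroom))
```

Feed the `bytes_billed` column from the query into `suggest_quota_tib`. If the busiest day in the period billed 3 TiB, the default headroom suggests a 6 TiB daily quota, while a project that never billed more than a fraction of a TiB stays at the 1 TiB floor.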

Now it's your turn!

Do this for all the projects you own, and then you can sleep tight. I hope you've found this article helpful. Please drop a line in the comments if you have any questions, feedback or suggestions related to this article.